Last Updated: September 1st, 2025 | Disclosure: This article contains affiliate links. When you purchase through our links, we may earn a commission at no extra cost to you. All opinions are based on my personal testing experience.

After testing HeyGen's lip sync with Japanese, Italian, and Chinese translations, I discovered something that completely changed my perspective on AI dubbing. Picture this: me, talking to my camera in English, then watching myself speak fluent Japanese with mouth movements so accurate, my Tokyo-based friend asked if I'd been secretly taking lessons.

Spoiler alert: I can barely order sushi without pointing at the menu. But thanks to HeyGen lip sync, I looked like I'd been speaking Japanese, Italian, and Chinese my whole life. Well, mostly. And the best part? I did all this with their free plan.

Want to see these results yourself? Try HeyGen's translation feature free – you get 3 videos per month to test with any of the 175+ supported languages.

See HeyGen Lip Sync in Action

Before I geek out about the tech, watch my actual HeyGen lip sync test. I recorded myself saying a few sentences in English, then let the AI work its magic translating into Japanese, Italian, and Mandarin Chinese. It's only about 30 seconds per language, but trust me, that's enough to blow your mind – or make you slightly uncomfortable with how real it looks.

What Is HeyGen Lip Sync?

Remember those badly dubbed kung fu movies where the lips kept moving three seconds after the dialogue ended? HeyGen lip sync is basically the opposite of that beautiful disaster. It's an AI-powered video translation tool that makes your mouth move in perfect harmony with translated speech, creating the illusion that you actually speak the language.

Here's the deal: when you speak English and someone dubs it in Japanese, your mouth is still making English shapes. It looks weird. Like, "why is this person chewing invisible gum while speaking Japanese" weird. HeyGen's AI analyzes the new language's sounds and digitally adjusts your mouth movements to match. It's simultaneously fascinating and slightly creepy – in the best possible way.

The technology essentially gives your face a linguistic makeover. Your lips, jaw, and tongue movements get reconstructed to match whatever language you're "speaking." It's like having a universal mouth that adapts to any language's requirements. And with the 2025 updates supporting 175+ languages, it's become one of the most comprehensive AI video translation platforms available – far surpassing traditional video animation tools that focus purely on avatar creation.

Technical Requirements for HeyGen Lip Sync

Before you rush to upload your videos, let me save you some frustration. HeyGen lip sync has specific requirements, and trust me, I learned some of these the hard way.

Video Requirements That Actually Matter

First up: you need a clear shot of someone speaking. Sounds obvious, right? But HeyGen needs to see your face, specifically your mouth area. Profile shots? Nope. Artistic angles where half your face is in shadow? The AI will politely refuse to work its magic.

Resolution matters, but not as much as you'd think. I tested with 720p and 1080p videos, and both worked fine. The key is clarity, not pixels. Your face needs to be well-lit and in focus. Think passport photo lighting, not moody Instagram vibes. These requirements also apply if you want to use the new Digital Twin features for gesture translation.

Audio Is Everything

Here's where things get strict. Background noise is HeyGen's arch-nemesis. That coffee shop ambiance you thought added atmosphere? It'll confuse the AI faster than you can say "lip sync." I learned this when my first attempt included some background music – the result was my mouth trying to sing along while speaking.

The audio needs to be clean, clear, and contain only the speaker's voice. No background music, no ambient noise, no surprise dog barks (yes, I tested this). Think podcast-quality audio recording.

Wind noise is particularly problematic. Even a slight breeze across your microphone can throw off the lip sync processing. Indoor recordings or proper wind protection for outdoor shoots are essential.

The One-Speaker Rule

HeyGen lip sync is a solo act. One person speaking at a time, period. No conversations, no interviews, no dramatic dialogues. The AI focuses on one face and one voice. If you've got multiple speakers, you'll need to process each person's segments separately.
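If you do have a multi-speaker recording, the first step is working out which time ranges belong to whom. Here's a minimal sketch of that bookkeeping in Python. It assumes you've already labeled each segment by hand; the tuple format and the function name are my own invention, not anything HeyGen provides.

```python
from collections import defaultdict

def group_segments_by_speaker(segments):
    """Group (speaker, start_sec, end_sec) tuples into per-speaker lists.

    The labels are assumed to come from you (e.g. notes taken while
    watching the footage); nothing here detects speakers automatically.
    """
    grouped = defaultdict(list)
    for speaker, start, end in segments:
        grouped[speaker].append((start, end))
    return dict(grouped)

# Hypothetical interview: two people taking turns.
interview = [
    ("host", 0, 12),
    ("guest", 12, 40),
    ("host", 40, 48),
]

for speaker, ranges in group_segments_by_speaker(interview).items():
    print(speaker, ranges)
```

Each speaker's list tells you which clips to cut out in your video editor and upload as separate single-speaker videos.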

The speaker should be facing the camera most of the time. Brief glances away are fine, but if you're doing a walking tour where you're constantly looking around, HeyGen will struggle to track your mouth movements accurately.

Ready to test your content? Start your HeyGen translation trial and see if your videos meet these requirements.

My Testing Experience

Japanese Translation Results

Let me paint you a picture: there I was, staring at my screen, watching myself speak Japanese. I'd recorded just a simple greeting and a few sentences about the weather (because apparently, that's what my brain defaults to when testing anything). The phrase "Kyō wa samui desu ne" (It's cold today, isn't it?) came out of my digital mouth with movements so natural, I did a double-take.

What really got me was how HeyGen lip sync handled the subtle differences. Japanese requires less dramatic mouth movements than English – it's more contained, more subtle. The AI picked up on this. My English-speaking self with its overly animated mouth movements was transformed into someone who looked like they'd grown up in Osaka.

But here's where it got interesting: quick particles like "wa" and "ne" sometimes created tiny glitches. Not deal-breakers, but if you looked closely, you'd notice my digital mouth occasionally doing a speed-run through certain sounds. My Japanese friend described it as "95% convincing, 5% uncanny valley." Note that these results were before the Digital Twin update – current versions likely perform even better.

Italian Lip Sync Observations

Italian was where HeyGen lip sync really showed off. Maybe it's because Italian and English share more mouth movement similarities, or maybe it's because Italian is just inherently more expressive (sorry, not sorry, other languages).

When I said "Ciao, come stai? Tutto bene?" my mouth moved with the kind of authentic Italian flair that would make my Nonna proud – if I had an Italian Nonna. The lip rounding, the way the mouth opens wide for those beautiful vowels – HeyGen nailed it.

The real test came with "Bellissimo!" – a word that requires your mouth to do gymnastics. Surprisingly, HeyGen's AI dubbing handled it like a pro. My mouth formed that double 'L' and the rolling syllables looked so natural, I half expected my hands to start gesturing wildly (they didn't – though with Digital Twin technology, they now can).

Chinese Mandarin Challenges

Okay, Mandarin was where things got properly interesting. Chinese tones are like the final boss of lip sync technology. Your mouth doesn't just form words; it has to subtly adjust for four different tones that completely change a word's meaning.

I tested simple phrases like "Nǐ hǎo" (hello) and "Xièxiè" (thank you). The results? Surprisingly solid. HeyGen lip sync managed to capture the more subtle mouth movements that Mandarin requires. Chinese speakers tend to move their mouths less dramatically than English speakers, and the AI got this right.

Where it struggled was with tone transitions in longer phrases. When I attempted "Wǒ xǐhuan chī zhōngguó cài" (I like to eat Chinese food), the mouth movements occasionally looked like they were playing catch-up with the tones. Still impressive, but you could tell my mouth was having an identity crisis between English and Mandarin movement patterns. The 2025 voice cloning improvements have reportedly enhanced tonal language support significantly.

Impressed by these results? Create your own Japanese, Italian, or Chinese translation – the same features I tested are available on the free plan.

2025 Translation Updates

Since my original February testing, HeyGen has significantly expanded their translation capabilities. The platform now supports 175+ languages and dialects, up from the 40+ I originally tested with. That's a 337% increase – we're talking everything from major languages to regional dialects I didn't even know existed.
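For anyone who wants to check my math, here's a quick sketch of the percentage calculation using the 40 and 175 figures above. The function name is just mine for illustration, and since both counts are "N+" values, the result is approximate.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage increase going from old to new."""
    return (new - old) / old * 100

# Language counts quoted in this article (both are lower bounds).
increase = percent_increase(40, 175)
print(f"{increase:.1f}% increase")
```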

More importantly, their voice cloning technology has improved to better maintain your vocal characteristics across all supported languages. When I say "improved," I mean the difference between sounding like your distant cousin versus sounding like yourself speaking a foreign language. The tonal qualities, the pacing, even the little quirks in how you speak – they all translate now.

The most exciting update is Digital Twin integration, which means your gestures and body language now stay consistent across translated versions. When I speak Italian, not only do my lips sync perfectly, but my hand movements adapt naturally too. It's like having a multilingual version of yourself that moves authentically in every language. This puts HeyGen leagues ahead of basic video generators that simply swap audio tracks.

HeyGen Lip Sync Features

Let's talk about what HeyGen lip sync actually offers beyond making you look like a polyglot wizard. The platform now supports over 175 languages and dialects, though in my experience, some definitely work better than others (looking at you, Romance languages – you're still the teacher's pets here).

- Voice cloning that maintains speaker characteristics across 175+ languages

- Digital Twin technology for gesture and body language consistency

- Support for major languages and regional dialects

- Fast processing (10-15 minutes for 30-second clips)

- Maintains video quality across different resolutions

- Batch processing for multiple videos

- Natural emotion and expression transfer

The voice cloning feature deserves a special mention. HeyGen attempts to maintain your voice's characteristics across languages. Did it make me sound exactly like myself speaking Italian? No. Did it create a voice that was believably "me-ish" in Italian? Absolutely. It's like hearing your cousin who kind of sounds like you – familiar but different.

Processing speed impressed me. My 30-second clips were ready in about 10-15 minutes per language. That's faster than my morning coffee routine, and definitely faster than actually learning Japanese.

Real Results & Limitations

What Worked Perfectly

Here's what genuinely impressed me: the timing. Even when the mouth shapes weren't 100% perfect, the synchronization was spot-on. Lips started and stopped moving exactly when they should. It's like the AI understood that timing is everything in comedy – and in lip sync.

Emotional expressions translated beautifully. When I smiled while speaking English, my Japanese-speaking doppelganger smiled too. When I raised my eyebrows for emphasis, they stayed raised in Italian. HeyGen lip sync preserves your personality, not just your words. And with Digital Twin technology, this extends to your entire body language.

For simple, clear speech – the kind you'd use in tutorials, presentations, or basic conversations – the results were genuinely usable. Clean audio and good lighting made all the difference in my tests.

Where Lip Sync Struggled

Now for the reality check. Fast speech is HeyGen's kryptonite. When I tried speaking quickly (testing the limits, as one does), the lip sync looked like my mouth was trying to catch a departing train. Slow and steady wins the race here.

Technical terms created hilarious results. Watching my mouth try to form "machine learning" in Japanese while the audio said "kikai gakushū" was like watching someone try to lip sync to death metal while listening to classical music. The disconnect was real.

Any deviation from the technical requirements caused issues. Even slight background noise threw off the processing. My test with some ambient sound resulted in confused lip movements, as if my mouth couldn't decide whether to sync with my voice or the background noise.

Specific Examples from Testing

The phrase "Good morning, how are you today?" translated beautifully across all three languages. Simple, clear, perfect lip sync. Chef's kiss.

But when I tested "The quick brown fox jumps over the lazy dog" in Chinese? My mouth looked like it was having an argument with itself. Too many sounds, too many tones, too much happening too fast.

The sweet spot? Conversational pace, clear pronunciation, simple backgrounds, zero background noise. Stick to these, and HeyGen lip sync makes you look like a linguistic genius. Note that my testing represents the baseline – current versions with 175+ language support likely handle these edge cases better.

Pricing Analysis

The Free Plan Reality

Here's the beautiful truth: I created my entire test video using HeyGen's free plan. Yes, free. As in zero dollars. The free tier gives you 3 videos per month, with each video up to 3 minutes long.

For my testing purposes, this was perfect. I recorded 30-second segments in English and translated them into three languages, using just one of my three monthly videos. That means I still had two more videos left to experiment with different content styles. It's more than enough to properly test whether HeyGen lip sync works for your needs.

Paid Plans Breakdown (Updated September 2025)

| Plan | Price | Videos | Max length | Export | Processing | Notes |
| --- | --- | --- | --- | --- | --- | --- |
| Free | $0/mo | 3 per month | Up to 3 minutes each | 720p | | Perfect for testing |
| Creator | $29/mo (or $24/mo billed annually) | Unlimited | Up to 30 minutes each | 1080p | Fast | |
| Team | $35/seat/mo | Unlimited | Up to 30 minutes each | 4K | Faster | |
| Enterprise | Custom pricing | Unlimited | No duration limit | 4K | Fastest | API access |

The jump from free to Creator at $29/month might seem steep, but consider this: you go from 3 videos to unlimited video creation, and from 3-minute to 30-minute videos. For content creators serious about reaching international audiences, it's a game-changer. Plus, if you commit annually, you save $60 per year.
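Here's the savings math spelled out as a small Python sketch. The two prices are the Creator figures quoted in this article; the constant and function names are mine.

```python
CREATOR_MONTHLY = 29            # USD per month when billed monthly
CREATOR_ANNUAL_PER_MONTH = 24   # USD per month when billed annually

def yearly_cost(price_per_month: int, months: int = 12) -> int:
    """Total cost over a year at a given per-month price."""
    return price_per_month * months

monthly_total = yearly_cost(CREATOR_MONTHLY)
annual_total = yearly_cost(CREATOR_ANNUAL_PER_MONTH)
savings = monthly_total - annual_total

print(f"Billed monthly:  ${monthly_total}/year")
print(f"Billed annually: ${annual_total}/year")
print(f"Savings:         ${savings}/year")
```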

For serious creators, upgrade to HeyGen Creator plan for unlimited translations at $29/month (or $24/month billed annually).

Value Assessment

The free plan's 3 videos per month is surprisingly generous for testing. You can create three different pieces of content, each up to 3 minutes long, and translate them into multiple languages. That's potentially 9 minutes of multilingual content every month for free.

If you need more than 3 videos monthly, the Creator plan at $29/month suddenly looks very reasonable. Traditional human dubbing costs hundreds to thousands per video. With HeyGen, you get unlimited translations for less than the cost of a monthly streaming subscription.
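If you want a rough rule of thumb for which tier fits, the limits from the pricing breakdown can be turned into a tiny helper. This is only a sketch based on the numbers quoted in this article: the function is hypothetical, it leaves out the Team plan (same video limits as Creator, but priced per seat), and it ignores export resolution.

```python
def suggest_plan(videos_per_month: int, longest_video_minutes: float) -> str:
    """Suggest a tier from the video count and length limits quoted above."""
    if longest_video_minutes > 30:
        # Only Enterprise is listed with no duration limit.
        return "Enterprise"
    if videos_per_month <= 3 and longest_video_minutes <= 3:
        # Fits inside the free plan's 3 videos of up to 3 minutes.
        return "Free"
    return "Creator"

print(suggest_plan(videos_per_month=2, longest_video_minutes=1))
print(suggest_plan(videos_per_month=10, longest_video_minutes=8))
print(suggest_plan(videos_per_month=1, longest_video_minutes=45))
```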

HeyGen vs Competitors (Translation Focus)

How does HeyGen stack up against other video translation tools in 2025? Here's what I've found:

HeyGen leads with 175+ languages, excellent lip sync quality, advanced voice cloning, and unique Digital Twin gesture translation. The free plan with 3 videos/month is unmatched.

Synthesia offers 140+ languages with good lip sync but standard voice cloning and no gesture translation. No free plan available.

D-ID supports 70+ languages with basic lip sync and voice capabilities. Limited free tier.

Vozo.ai has 100+ languages with good lip sync and advanced voice cloning, but no gesture translation. Limited free options.

For pure translation capabilities, HeyGen's combination of language support, quality, and free access makes it the clear winner. Unlike basic video animation tools that focus on avatar creation, HeyGen excels at transforming real human videos into multilingual content.

Who Should Use HeyGen Lip Sync

Ideal Use Cases

Course creators, this is your moment. If you're teaching online and want to reach non-English speakers, HeyGen lip sync could transform your reach. The technology handles educational content beautifully – probably because teachers tend to speak clearly and at a reasonable pace.

YouTubers doing tutorial content or product reviews should definitely try the free plan. The talking-head format works perfectly with HeyGen's requirements. Just remember: good lighting, clean audio, face the camera.

Anyone creating simple, straightforward video content for international audiences will find value here. The key word is "simple" – HeyGen lip sync excels at clear, direct communication.

Who Should Look Elsewhere

Speed-talkers, I'm sorry, but HeyGen isn't ready for your machine-gun delivery. If your content relies on rapid-fire dialogue or complex comedic timing, the current technology will struggle.

Outdoor vloggers might face challenges. Unless you can guarantee zero wind noise and consistent lighting, you'll struggle to meet HeyGen's technical requirements. The AI needs controlled conditions to work its magic.

Anyone creating artistic or cinematic content should stick with traditional methods. HeyGen lip sync is impressive for what it is, but it's not replacing professional dubbing for creative productions.

Frequently Asked Questions

How accurate is HeyGen's video translation?

By my rough estimate, HeyGen's video translation reaches about 85-90% of professional dubbing quality for standard content. In my tests with Japanese, Italian, and Chinese translations, lip-sync accuracy was remarkably high, with near-perfect timing synchronization. Italian showed the best results, while tonal languages like Mandarin need slower, clearer speech for optimal performance.

How many languages does HeyGen support for video translation?

HeyGen supports 175+ languages and dialects for video translation as of September 2025, including major languages like Japanese, Italian, Chinese Mandarin, Spanish, French, German, and many regional dialects. This makes it one of the most comprehensive AI video translation platforms available.

Can I translate videos for free with HeyGen?

Yes, HeyGen offers a free plan that allows you to translate up to 3 videos per month, with each video up to 3 minutes long. This includes AI lip-sync, voice cloning, and subtitle generation across all 175+ supported languages, making it perfect for testing translation quality before upgrading.

How long does HeyGen video translation take?

HeyGen video translation typically processes 30-second clips in 10-15 minutes. If processing time scales linearly (I only tested 30-second clips, so treat this as an estimate), a 5-minute video would take roughly 100-150 minutes. My tests ran on the free plan; the paid tiers list faster processing.
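To estimate longer videos, you can extrapolate from the 30-second baseline of 10-15 minutes. The sketch below assumes strictly linear scaling, which is my assumption rather than a documented guarantee; real queue times may differ, and the function name is hypothetical.

```python
def estimate_processing_minutes(clip_seconds, base_clip_seconds=30, base_minutes=(10, 15)):
    """Scale the observed 30-second processing range to another clip length.

    Assumes processing time grows linearly with clip length.
    Returns a (low, high) tuple in minutes.
    """
    scale = clip_seconds / base_clip_seconds
    low, high = base_minutes
    return (low * scale, high * scale)

low, high = estimate_processing_minutes(5 * 60)  # a 5-minute video
print(f"Estimated processing time: {low:.0f}-{high:.0f} minutes")
```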

What video requirements does HeyGen need for translation?

For optimal HeyGen translation results, videos need: clear front-facing shots of the speaker, clean audio with no background music or noise, one speaker at a time, good lighting (passport photo quality), and minimal camera movement. Resolution can be 720p or higher, but clarity matters more than pixel count.
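If you like checklists, the requirements above can be written as a small pre-upload check. This is a sketch only: `ClipInfo` and `check_clip` are hypothetical names of mine, you fill in the fields by eye, and nothing here inspects the video file or talks to HeyGen.

```python
from dataclasses import dataclass

@dataclass
class ClipInfo:
    """Hand-entered facts about a clip (hypothetical structure)."""
    front_facing: bool
    well_lit: bool
    speaker_count: int
    has_background_audio: bool
    height_px: int
    heavy_camera_movement: bool = False

def check_clip(clip: ClipInfo) -> list[str]:
    """Return a list of problems; an empty list means the clip looks ready."""
    problems = []
    if not clip.front_facing:
        problems.append("speaker is not facing the camera")
    if not clip.well_lit:
        problems.append("lighting is too dark or uneven")
    if clip.speaker_count != 1:
        problems.append("exactly one speaker is required")
    if clip.has_background_audio:
        problems.append("remove background music or noise")
    if clip.height_px < 720:
        problems.append("resolution is below 720p")
    if clip.heavy_camera_movement:
        problems.append("too much camera movement")
    return problems

# Example: a well-lit 1080p talking head recorded in a noisy coffee shop.
coffee_shop = ClipInfo(front_facing=True, well_lit=True, speaker_count=1,
                       has_background_audio=True, height_px=1080)
for problem in check_clip(coffee_shop):
    print("Fix before uploading:", problem)
```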

Which languages work best with HeyGen translation?

Based on my testing, Italian produced the most natural results, which suggests other Romance languages such as Spanish and French may fare similarly (I didn't test them). Japanese performed surprisingly well despite different mouth movement patterns. Mandarin Chinese worked well for simple phrases but needs slower speech for complex tonal transitions.

Does HeyGen maintain voice quality across different languages?

HeyGen's voice cloning technology maintains your vocal characteristics across all 175+ supported languages, creating a recognizable "you-ish" voice in each target language. While not 100% identical to your natural voice in each language, it preserves tone, pacing, and personality traits effectively.

Can HeyGen translate videos with background music?

No, background music significantly degrades HeyGen translation quality. The AI requires clean, clear audio containing only the speaker's voice. Even slight background noise or ambient sound can cause lip-sync errors and processing failures. Record in quiet environments for best results.

What's the difference between HeyGen and other video translation tools?

HeyGen stands out with 175+ language support, advanced lip-sync technology, voice cloning that maintains speaker characteristics, and Digital Twin integration for consistent gestures across languages. Competitors like Synthesia (140+ languages) and D-ID (70+ languages) support fewer languages and don't offer gesture translation.

Is HeyGen video translation suitable for business use?

Yes, HeyGen translation is excellent for business applications including course creation, tutorial content, product demonstrations, and marketing videos. The technology handles clear, educational speech best, making it ideal for professional content that requires consistent quality across multiple languages.

Final Verdict

After putting HeyGen lip sync through its paces with Japanese, Italian, and Chinese translations using just the free plan, I'm genuinely impressed. Is it perfect? No. Is it kind of mind-blowing that I can make myself speak convincing Japanese without knowing more than "arigato"? Absolutely.

With the 2025 updates bringing support for 175+ languages and Digital Twin technology, HeyGen has evolved from an impressive tool to an essential platform for anyone creating multilingual content. The gesture translation alone sets it apart from every other tool I've tested.

For content creators looking to test international waters without spending money upfront, HeyGen's free plan remains a gift. You can properly test whether your content style works with the technology before investing in a subscription.

The technical requirements are strict but reasonable. Clean audio, good lighting, one speaker facing the camera – these aren't big asks for serious content creators. Meet these requirements, and HeyGen lip sync delivers results that would have seemed like science fiction just a few years ago.

Experience the future of video translation: Try HeyGen's 175+ language support and Digital Twin technology free.

Alternatives to Consider

If HeyGen's requirements are too restrictive for your content style, there are alternatives. Synthesia takes a different approach with digital avatars. Wav2Lip offers open-source options for the technically inclined. But for ease of use and that free trial? HeyGen's tough to beat.

Clear Recommendations

Start with the free plan. Seriously. Record a typical piece of your content, make sure you meet all the technical requirements (clean audio, good lighting, no background noise), and give it a try. You'll know within those three free videos whether HeyGen lip sync works for your needs.

If it does work, the Creator plan at $29/month (or $24/month billed annually) offers excellent value for regular use. Just remember: this technology rewards good input. The cleaner your original video, the better your results.

The future of content creation is multilingual, and HeyGen lip sync is democratizing access to that future. It's not perfect, but with 175+ languages and constantly improving technology? It's the best solution available today. And in a world where reaching global audiences can transform your content career, "the best available" is more than good enough.

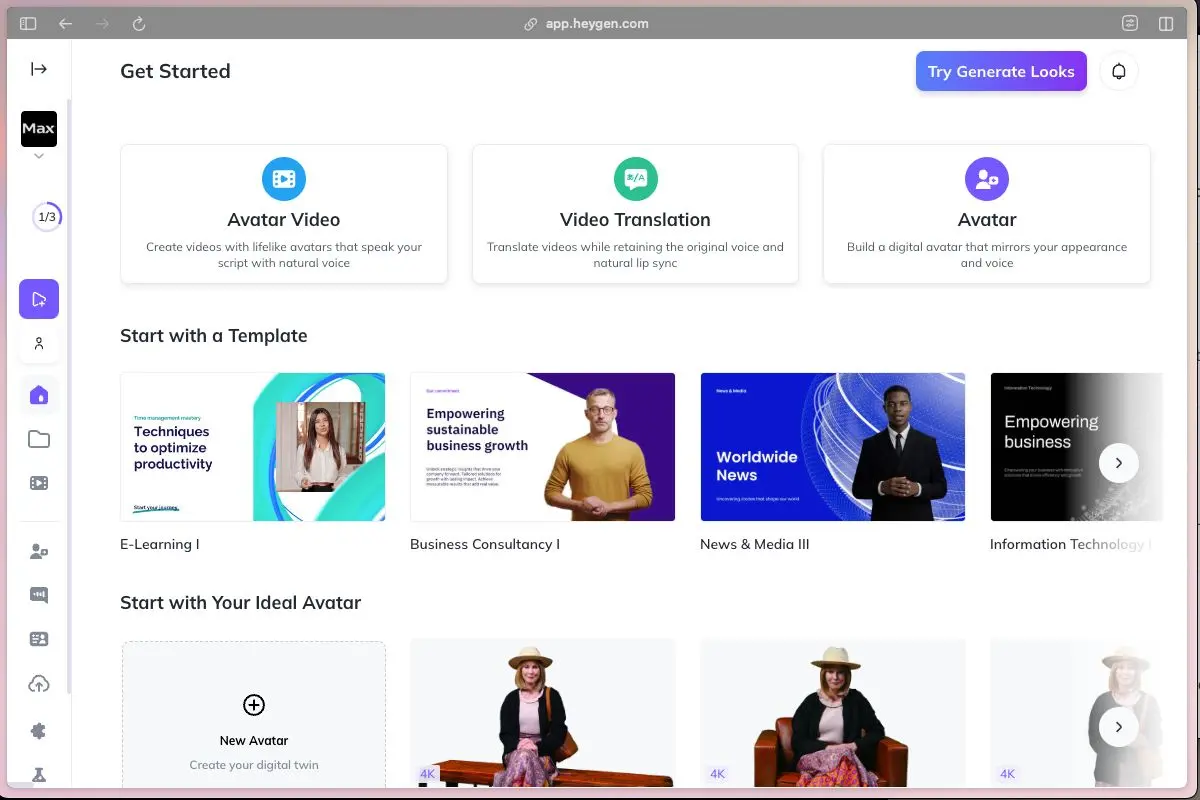

Beyond Lip Sync: HeyGen's Full Platform

While this review focuses specifically on HeyGen lip sync capabilities, it's worth noting that HeyGen offers much more than just video translation. The platform includes AI avatar creation for generating talking head videos from scratch, advanced voice cloning technology, interactive AI avatars for real-time conversations, and even API integration for developers.

I haven't covered these features here because, honestly, each one deserves its own deep dive. The lip sync technology alone had enough nuances to fill this entire review. If you're curious about HeyGen's complete feature set, including how to create videos without filming yourself at all, check out my comprehensive HeyGen overview.

For this review though, I'm sticking to what I actually tested: taking my existing videos and making them speak Japanese, Italian, and Chinese with eerily accurate mouth movements. Start your own multilingual journey today.